By Jenna Somers

A five-year, $3.9 million grant from the National Institute on Deafness and Other Communication Disorders will support novel research into the brain function of language development in 4- to 8-year-old late talkers with language delay to identify predictive biomarkers that could support early screening and treatment. The study is the first to longitudinally examine the brain basis of preschool language development in typically developing children and late talkers, according to James Booth, the study’s principal investigator and Patricia and Rodes Hart Professor of Educational Neuroscience at Vanderbilt Peabody College of education and human development.

Children with language delay are at risk of experiencing academic and socio-emotional problems, and Booth says about six percent of the population struggles with developmental language disorder, which can have negative consequences for reading and math achievement. Despite these outcomes, no behavioral or neural basis exists for predicting which children are at risk for developmental language disorder, a significant research gap that Booth’s team hopes to address.

“Language delay has long been a problem that has perplexed the field. There are kids who are late bloomers, who catch up to their peers—with some subtle differences in their learning—and then there are kids who are persistently delayed. We can’t predict who will fall into those two groups with behavioral measures alone. So, we want to know if we can use neural imaging to understand the fundamental processes of language development in the brain, and then use these neural measures along with behavioral measures to predict outcomes. We hope this work will support the development of interventions that target specific brain functions at specific time periods causing language delay,” Booth said.

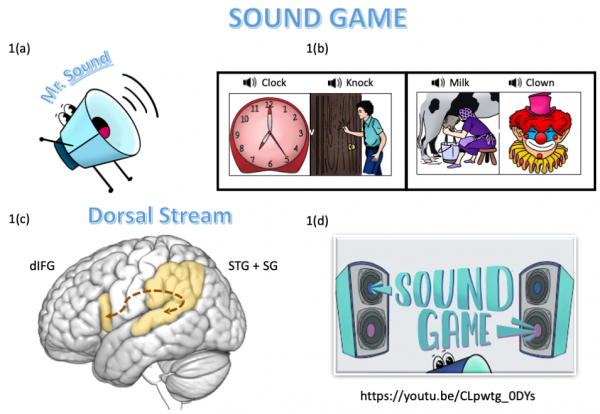

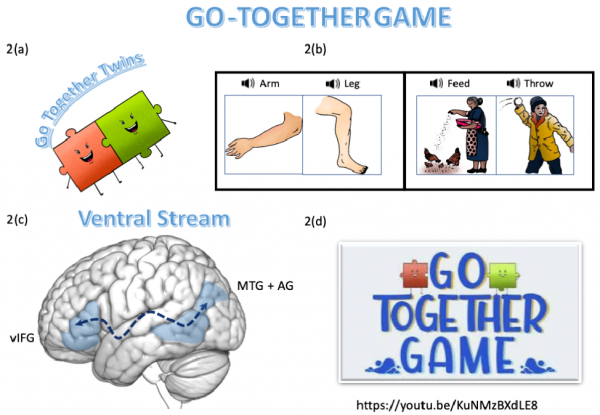

The research team will focus on two “streams” in the brain that support language processing. The superior (i.e., dorsal) stream supports the processing of sounds, and the inferior (i.e., ventral) stream supports the processing of word meaning. Booth and his team theorize that the superior stream drives language development initially, as children first acquire an understanding of sounds, and sets the pace for the understanding of word meaning, which has a growth spurt at a later age. However, they believe these processes are also bidirectional over time, meaning a larger vocabulary could lead to a more nuanced understanding of sounds, which supports children’s ability to generalize about speech structure.

Understanding the long-term directional relationship between the development of skills in sound and meaning, and whether there are age differences in directional effects between the neural streams, has important implications for identifying language delay, knowing when to intervene, and deciding whether treatment strategies should focus on sound-based or meaning-based brain mechanisms at certain time points.

Importantly, Booth notes that while late talking is often a characteristic of autism, this study will not include children with autism because the neural basis for autism is different from that for developmental language disorder, the latter of which concerns the structure of the language system in the brain.

“Autistic children, children with language delay, those with developmental language disorder, and those with other types of neural-based disorders all have late talking in common as a phenotype, but it wouldn’t be appropriate to deliver an autism intervention to a language impaired child or vice versa. We greatly need these neural measures to support accurate differential diagnoses and to ensure the most effective treatments are provided in accord with underlying conditions causing the late talking,” said Stephen Camarata, a co-investigator on the grant and professor of hearing and speech sciences at Vanderbilt School of Medicine and Vanderbilt University Medical Center. Camarata identifies and treats speech and language disorders, with specific interest in autism, Down syndrome, phonological disorder, and language disorder.

David Cole, Patricia and Rodes Hart Professor of Psychology and Human Development, serves as a co-investigator on the grant, providing his statistical expertise in longitudinal growth models. Avantika Mathur, a senior research analyst in the Department of Psychology and Human Development, coordinates the project and is an expert in processing neuroimaging data.

The team is in the process of recruiting child participants for the study. Parents of children who are two-and-a-half to three years old, know fewer than 250 words, and/or speak in no more than two-word phrases are invited to visit the study’s website to complete a questionnaire and learn more about whether their child is eligible.

Children who qualify to participate will initially visit the lab twice. In the first visit, when they are three-and-a-half to four-and-a-half years old, they will take behavioral assessments to measure speech, language, and intelligence. They will also practice playing sound and meaning games in a mock functional magnetic resonance imaging (fMRI) scanner to familiarize themselves with the testing environment. On their second visit, the children will play the games in a real fMRI scanner, which will collect brain images as they play. Children and their parents will be paid for participation and receive a report of their standardized testing and a picture of their brain. Children will revisit the lab when they are between six and eight years old to allow the research team to measure longitudinal behavioral and neural changes.