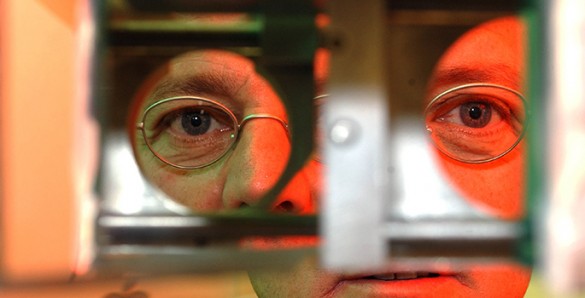

- Randolph Blake looking through the instrument he uses to test binocular rivalry: It uses a series of mirrors to present different images to each eye. (Neil Brake / Vanderbilt)

Musicians don’t just hear in tune, they also see in tune.

That is the conclusion of the latest scientific experiment designed to puzzle out how the brain creates an apparently seamless view of the external world based on the information it receives from the eyes.

“Our brain is remarkably efficient at putting us in touch with objects and events in our visual environment, indeed so good that the process seems automatic and effortless. In fact, the brain is continually operating like a clever detective, using clues to figure out what in the world we are looking at. And those clues come not only from what we see but also from other sources,” said Randolph Blake, Centennial Professor of Psychology at Vanderbilt University, who directed the study.

Scientists have known for some time that the brain exploits clues from sources outside of vision to figure out what we are seeing. For example, we tend to see what we expect to see based on past experience. Moreover, we tend to see what our other senses tell us might be present in the world, including what we hear. A remarkable example of this kind of bisensory influence is a beguiling visual illusion created by sound: When a person views a single flash of light accompanied by a pair of beeps presented in close succession, the individual incorrectly perceives two flashes, not just one.

“In our study we asked just how abstract can this supplementary information be?” Blake said.

The discovery of Blake and his colleagues that information as abstract as musical notation can affect what we see is reported this week in the Proceedings of the National Academy of Sciences Online Early Edition in an article titled “Melodic sound enhances visual awareness of congruent musical notes, but only if you can read music.” Blake’s co-authors are Associate Professor of Psychology Chai-Youn Kim and graduate students Minyoung Lee and Sujin Kim from Korea University in Seoul.

To answer their question, the researchers turned to a classical test called binocular rivalry that presents the brain with a clear visual conflict, which it struggles to resolve. The binocular rivalry effect is created by presenting incompatible images separately to each eye. The brain evidently can’t settle on a single image: the viewer’s perception fluctuates back and forth between the two images every few seconds.

In their study, the researchers presented participants with an array of moving contours to one eye and a scrolling musical score to the other. Participants pressed one button when seeing the contours and another button when seeing the musical score. As expected, perception switched back and forth between the conflicting possibilities, with each view being perceptually dominant for roughly the same length of time.

Next, the researchers played a simple melody through the headphones that the participants wore as they performed the task. When the melody was playing, participants reported that they tended to spend more time watching the visual score and less time watching the moving contours.

For non-musicians it didn’t matter whether or not the melody being played matched the musical score that they were viewing. But the people able to read music reported watching the visual score for longer periods when the melody they were hearing was identical to the melody they were viewing than they did when the two were different.

A second key finding in the study was that the influence of the auditory melody on the predominance of the visual score disappeared during the periods when the moving contours were dominant. The researchers found that playing the melody prolonged the periods when the musical score dominated a person’s perception, but it did not cut short the periods when the moving contours were predominant. In other words, the musical melody and visual melody appear to be temporarily uncoupled when the visual member of the pair is temporarily erased from awareness.

“What this tells us is that the kind of information the brain uses to interpret what we see around us includes abstract symbolic input such as music notation,” said Blake. “However, this kind of input is only effective while an individual is aware of it.”

The research was funded by the National Research Foundation of Korea grants NRF-2013R1A1A1010923 and NRF-2013R1A2A2A03017022 and National Institutes of Health grant P30-EY008126.